Do you need a way to add intelligent, real-time conversation to your app? Thousands of developers have already connected apps to ChatGPT successfully. With the right structure and strategy, you can build your own ChatGPT-powered app without delays or confusion. This guide shows how to integrate ChatGPT into an app using a clear process that works across industries. It covers the core methods, tools, and design principles that help you achieve a smooth and scalable setup. You will also learn how to integrate AI into an app using the OpenAI API, add advanced features, and stay in control of privacy and cost.

Why businesses are adding ChatGPT to apps

OpenAI has said that more than 92% of Fortune 500 companies are building on its products. The rise of ChatGPT shows that smart automation no longer stays on the sidelines. Companies now look for scalable ways to improve support, boost sales, and personalize user journeys, and ChatGPT fits that need. When teams ask how to integrate AI into an app, they often focus on the technical steps. But the real value comes from what happens after the integration. ChatGPT answers questions instantly, adapts to tone, and uses the context of the conversation to shape its replies. These features turn static interfaces into responsive, human-like experiences that build trust.

ChatGPT integration with websites and mobile apps helps reduce support load, streamline onboarding, and drive conversions. Some businesses report over 50% cost savings on customer service and faster ticket resolution times. The gains grow even more when companies combine ChatGPT with voice, search, and recommendation systems. Learning how to integrate AI into an app unlocks more than chat—it creates new ways to deliver value. And as more companies adopt conversational tools, those who wait risk falling behind.

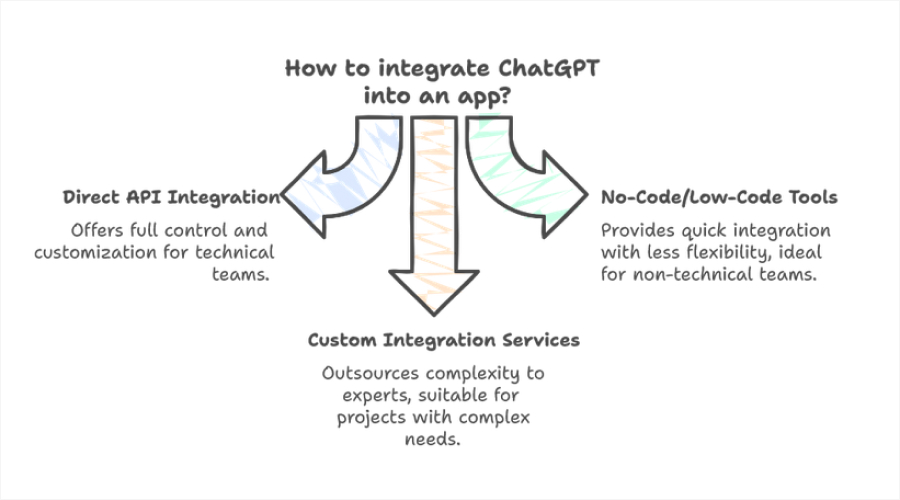

ChatGPT integration approaches

There’s no one-size-fits-all method for integrating ChatGPT into your app. Some teams prefer full control and go with custom code. Others want to move fast without writing scripts, so they pick no-code tools. Larger projects may require professional AI development teams to handle system complexity and compliance requirements. Each method works, but the setup, flexibility, and long-term impact differ. To learn how to integrate ChatGPT into an app successfully, your team needs to weigh effort vs. value across three main approaches.

Direct API integration

This option works best for development teams that want full control. You connect to the OpenAI API, write server-side logic, and define how ChatGPT interacts with users. This path allows deep customization—everything from system prompts to model selection. Developers who know how to integrate ChatGPT into an app at this level can fine-tune outputs, manage tokens, and align the model with business logic. It also allows you to combine ChatGPT with internal databases or other AI modules through middleware or vector search systems.

No-code and low-code tools

Platforms such as Zapier or Bubble provide quick access to ChatGPT features with little or no code. These tools manage most of the setup through visual workflows. While they reduce flexibility, they help non-technical teams add ChatGPT to websites or internal dashboards rapidly. This method suits proof-of-concept work, MVP releases, or support automation without requiring developers. However, teams that want to scale or modify advanced logic often migrate to a full-code setup.

Custom ChatGPT integration services

Some companies outsource the work. Agencies and AI development firms build end-to-end systems that match business requirements across industries. This option fits projects with complex backends, regulated data, or AI-specific product needs. It also suits teams who prefer expert support for long-term scaling. These vendors often handle everything—from model configuration to prompt engineering, privacy compliance, and UI/UX optimization—removing technical burden from internal teams.

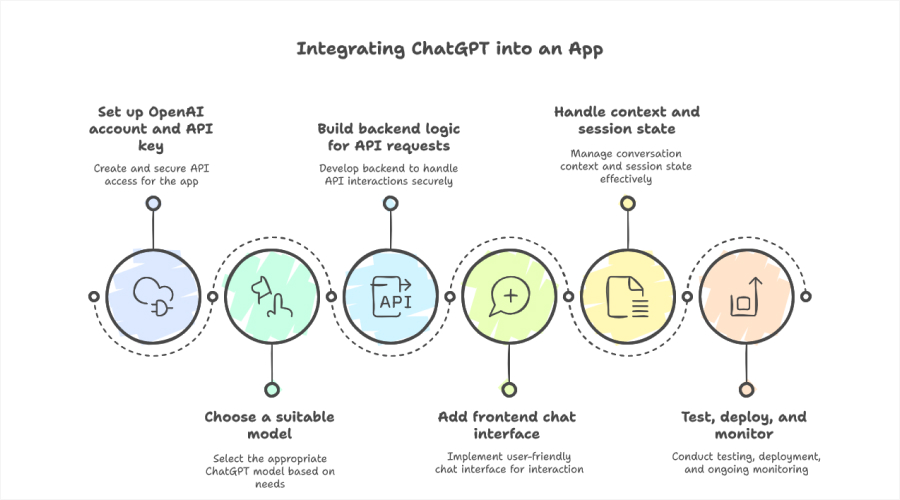

How to integrate ChatGPT into an app

The steps below apply to most app types, whether you’re creating a customer support assistant, a tutoring feature, or a recommendation agent. Each part of the flow, from API setup to UI delivery, requires careful planning and secure implementation. If your team lacks front-end capacity, a front-end development services company can help build a UI that delivers a seamless experience aligned with your AI capabilities.

Step 1. Set up your OpenAI account and API key

Go to platform.openai.com, sign in or create a new account, and navigate to the API Keys section. Click “Create new secret key” and copy the key securely. This key is your app’s pass to access OpenAI services. Store the key in an environment variable or secure vault. Avoid hardcoding it or exposing it to the frontend. If attackers obtain the key, they can misuse your account and run up costs. Teams often use tools like AWS Secrets Manager or HashiCorp Vault in production, and dotenv (.env) files for local development.
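A minimal Python sketch of the server-side pattern described above, assuming the key lives in an environment variable named `OPENAI_API_KEY` (the variable the official OpenAI SDKs read by default). The helper names `load_api_key` and `masked` are hypothetical; the point is to fail fast when the key is missing and never to print it in full.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment (hypothetical helper).

    Fails fast at startup instead of sending unauthenticated requests later.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; configure it in your secret store or .env file")
    return key


def masked(key: str) -> str:
    """Return a log-safe version of the key that shows only its last 4 characters."""
    return "*" * max(len(key) - 4, 0) + key[-4:]
```

In local development, a `.env` file loaded with a tool such as `python-dotenv` can populate the variable; in production, inject it from your secrets manager instead of shipping the file.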

Step 2. Choose a suitable model

OpenAI offers several models under different pricing tiers and capabilities:

- GPT-3.5: Cost-effective, handles short queries well.

- GPT-4 Turbo: Optimized for complex tasks with lower latency and lower cost than GPT-4.

- GPT-4o: Supports voice, image, and text—ideal for multimodal interactions.

Choose a model based on expected load, performance needs, and budget. For example, apps that handle basic FAQs can run GPT-3.5, while apps that need stronger reasoning or longer conversational context work better with GPT-4 Turbo or GPT-4o. Review the OpenAI pricing page to estimate token usage and monthly spend.
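Because billing is per token, with separate input and output rates quoted per million tokens, a rough monthly estimate is traffic times average tokens per request times the rate. The sketch below assumes you pass in the prices yourself; the numbers in the usage line are made up and are not OpenAI's actual rates, so take current values from the pricing page.

```python
from dataclasses import dataclass


@dataclass
class ModelPrice:
    # Placeholder USD prices per 1M tokens; replace with current values from OpenAI's pricing page.
    input_per_million: float
    output_per_million: float


def estimate_monthly_cost(price: ModelPrice, requests_per_day: int,
                          avg_input_tokens: int, avg_output_tokens: int,
                          days: int = 30) -> float:
    """Estimate monthly spend in USD from average token counts per request."""
    requests = requests_per_day * days
    input_cost = requests * avg_input_tokens / 1_000_000 * price.input_per_million
    output_cost = requests * avg_output_tokens / 1_000_000 * price.output_per_million
    return round(input_cost + output_cost, 2)


# Example with made-up prices: 1,000 requests/day, 500 input and 200 output tokens each.
example = estimate_monthly_cost(ModelPrice(1.0, 2.0), 1000, 500, 200)
print(example)  # 27.0
```

Running the same estimate for two candidate models with their real prices makes the cost side of the model choice concrete before any traffic arrives.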

Step 3. Build backend logic for API requests

The backend connects your app to the ChatGPT API securely. It receives messages from the user, packages them into a format the API accepts, and sends them to OpenAI’s servers. After the API generates a response, the backend returns that response to the frontend for display. To handle this properly, set up a route that processes incoming messages and includes parameters like the model name, conversation history, and any custom instructions for tone or behavior. Make sure to return only the relevant output from the model—this keeps responses clean and reduces unnecessary load.
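A framework-agnostic sketch of that backend route, using only the Python standard library and the Chat Completions endpoint. The function names, the default model, and the generic system prompt are assumptions for illustration; in a real app you would wire `handle_chat` to a route in your web framework, or use the official `openai` SDK instead of raw HTTP. The `send` parameter exists so tests can substitute a fake client.

```python
import json
import os
import urllib.request
from typing import Callable

# OpenAI Chat Completions endpoint.
API_URL = "https://api.openai.com/v1/chat/completions"

Message = dict[str, str]


def build_payload(model: str, system_prompt: str,
                  history: list[Message], user_message: str) -> dict:
    """Package the system prompt, prior turns, and the new user message."""
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history)
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages}


def post_to_openai(payload: dict) -> dict:
    """Send the payload over HTTPS from the server; the key never reaches the browser."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


def handle_chat(user_message: str, history: list[Message],
                send: Callable[[dict], dict] = post_to_openai,
                model: str = "gpt-4o",
                system_prompt: str = "You are a helpful assistant.") -> str:
    """Route body: build the request, call the API, and return only the reply text."""
    payload = build_payload(model, system_prompt, history, user_message)
    data = send(payload)
    return data["choices"][0]["message"]["content"]
```

Returning only `choices[0].message.content` keeps the frontend payload small; if you need token counts for monitoring, read the response's `usage` field on the server before discarding the rest.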

Always monitor for API errors. Rate limits, timeouts, or invalid requests can cause failed responses, so your backend should handle these cases gracefully. Use retry logic to recover from temporary issues, and log errors to help diagnose problems quickly. This layer plays a key role in managing response quality, privacy, and performance.
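One common way to implement the retry logic is exponential backoff with jitter, retrying only errors that are likely to be temporary. In this sketch `TransientAPIError` is a hypothetical exception your HTTP layer would raise for rate limits (HTTP 429), server errors (5xx), and timeouts; other invalid requests should fail immediately because retrying will not fix them. The `sleep` parameter is injectable so tests run instantly.

```python
import logging
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")
logger = logging.getLogger("chat_backend")


class TransientAPIError(Exception):
    """Hypothetical error type for retryable failures (HTTP 429, 5xx, timeouts)."""


def call_with_retry(fn: Callable[[], T], max_attempts: int = 4,
                    base_delay: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying transient failures with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientAPIError as exc:
            if attempt == max_attempts:
                logger.error("Giving up after %d attempts: %s", attempt, exc)
                raise
            # Delay doubles each attempt (0.5s, 1s, 2s, ...) plus up to 10% random jitter.
            delay = base_delay * (2 ** (attempt - 1))
            delay += random.uniform(0, delay * 0.1)
            logger.warning("Attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            sleep(delay)
    raise AssertionError("unreachable: the loop always returns or raises")
```

If you use the official `openai` Python SDK, it already retries certain errors with backoff and exposes a `max_retries` option, so a wrapper like this is mainly useful around custom HTTP clients.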

Step 4. Add a frontend chat interface

Build a clean, responsive interface that encourages interaction. You don’t need complex animations or advanced frameworks to get started. A standard HTML/CSS layout with a message window, input field, and send button works well. Use JavaScript to capture the user’s message and send it to your backend route. Show a loading indicator during the API call and display ChatGPT’s reply when the response arrives. For longer sessions, render a conversation history panel so users can scroll through the exchange. If you support mobile users, prioritize tap targets and vertical layout flow.

Step 5. Handle context and session state

The Chat Completions API is stateless: each call sees only the messages you send with it, so without earlier turns the model loses track of what the user asked before. Append the last few interactions to each request using the messages array structure. The system message can define assistant behavior (“You are a customer support assistant for a SaaS company”), while user and assistant messages show the flow. To avoid hitting token limits, truncate older messages or summarize them on the server. You can also store interaction history in a database and retrieve it per session. This gives your app memory across visits, which helps in support, onboarding, and personal assistant scenarios.
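A sketch of the truncation step: keep the system message, then walk backwards from the newest turn and keep messages until a token budget runs out. Token counts here use a rough four-characters-per-token heuristic (an assumption; a tokenizer such as `tiktoken` gives accurate counts), and `trim_history` is a hypothetical helper name.

```python
from typing import Callable

Message = dict[str, str]


def approx_tokens(text: str) -> int:
    """Rough heuristic: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


def trim_history(messages: list[Message], max_tokens: int,
                 count: Callable[[str], int] = approx_tokens) -> list[Message]:
    """Keep the system message plus the newest turns that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"][:1]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count(m["content"]) for m in system)
    kept: list[Message] = []
    # Walk backwards from the most recent turn so the oldest turns are dropped first.
    for message in reversed(turns):
        cost = count(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))
```

For memory across visits, load the stored history for the session ID from your database and pass it through `trim_history` before each request; turns that no longer fit are good candidates for a server-side summary.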

Step 6. Test, deploy, and monitor

Run local tests before going live. Validate response formats, error handling, and fallback logic. Simulate bad inputs, timeouts, and malformed requests to uncover edge cases. Use tools like Postman, Jest, or Mocha to automate basic validation.
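Tests like these are easiest to write when response handling is isolated in a small function. This Python sketch (pytest-style test functions with plain `assert`) validates the response shape and falls back to a fixed message on malformed data; `extract_reply` and `FALLBACK_REPLY` are hypothetical names, and the fallback text is a placeholder.

```python
from typing import Any

FALLBACK_REPLY = "Sorry, I couldn't answer that right now. Please try again."


def extract_reply(data: Any) -> str:
    """Return the assistant text, or a fallback if the response shape is unexpected."""
    try:
        content = data["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError):
        return FALLBACK_REPLY
    if not isinstance(content, str) or not content.strip():
        return FALLBACK_REPLY
    return content.strip()


def test_valid_response():
    data = {"choices": [{"message": {"role": "assistant", "content": " Hello "}}]}
    assert extract_reply(data) == "Hello"


def test_malformed_responses_fall_back():
    # Simulated bad inputs: missing keys, empty lists, wrong types, null content.
    for bad in (None, {}, {"choices": []}, {"choices": [{"message": {}}]},
                {"choices": [{"message": {"content": None}}]}):
        assert extract_reply(bad) == FALLBACK_REPLY
```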

Once the integration works end-to-end, deploy it to staging and test under real usage. Measure latency, memory use, and API cost. Each API response reports the tokens it consumed in a usage field, and OpenAI’s usage dashboard shows aggregate consumption; track both to stay within budget. Set up alerts for error rates, and monitor logs for repeated failures or user friction points.
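Each Chat Completions response includes a `usage` object with `prompt_tokens`, `completion_tokens`, and `total_tokens`. The sketch below accumulates those counts and records an alert once a token budget is exceeded; the budget, the `UsageTracker` name, and the in-memory alert list are hypothetical, and in production you would forward alerts to your monitoring tool.

```python
import logging
from dataclasses import dataclass, field

logger = logging.getLogger("chat_usage")


@dataclass
class UsageTracker:
    """Accumulates the `usage` block returned with each Chat Completions response."""
    daily_token_budget: int  # hypothetical budget you choose; not an OpenAI setting
    prompt_tokens: int = 0
    completion_tokens: int = 0
    alerts: list[str] = field(default_factory=list)

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    def record(self, response: dict) -> None:
        """Add one response's token counts and flag the budget if it is exceeded."""
        usage = response.get("usage") or {}
        self.prompt_tokens += usage.get("prompt_tokens", 0)
        self.completion_tokens += usage.get("completion_tokens", 0)
        logger.info("request tokens: prompt=%s completion=%s",
                    usage.get("prompt_tokens"), usage.get("completion_tokens"))
        if self.total_tokens > self.daily_token_budget:
            message = f"Token budget exceeded: {self.total_tokens} > {self.daily_token_budget}"
            logger.warning(message)
            self.alerts.append(message)
```

Create a fresh tracker (or key one by date) each day so the budget check matches the period you bill against.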

How to customize ChatGPT for business needs

Once the base integration works, the next challenge is to make ChatGPT useful inside your product. Raw output from the API often feels generic. To align the experience with your brand, you need to adjust its tone, content, and behavior. Customization helps your app speak in the right voice, focus on the right topics, and meet the specific needs of your users.

1. Set the right system behavior

Every ChatGPT conversation starts with a system prompt. This prompt defines how the assistant behaves. For example, a support assistant might follow:

“You are a helpful support agent for an e-commerce platform. Answer concisely and prioritize verified customer data.”

This message controls tone, formality, and intent. You can change it based on the feature, department, or user type. Clear and specific instructions produce consistent results.
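Since the system prompt can change by feature, department, or user type, one simple approach is a lookup table of prompts. The catalog keys, prompt wording, and `system_message` helper below are illustrative assumptions, not recommended prompts.

```python
from typing import Optional

# Hypothetical prompt catalog: keys and wording are illustrative only.
SYSTEM_PROMPTS = {
    "support": ("You are a helpful support agent for an e-commerce platform. "
                "Answer concisely and prioritize verified customer data."),
    "onboarding": "You guide new users through account setup in short, numbered steps.",
    "sales": "You answer pricing and plan questions accurately and never promise discounts.",
}
DEFAULT_PROMPT = "You are a helpful assistant for our app."


def system_message(feature: str, user_type: Optional[str] = None) -> dict:
    """Return the system message for a feature, optionally tailored to the user type."""
    prompt = SYSTEM_PROMPTS.get(feature, DEFAULT_PROMPT)
    if user_type:
        prompt += f" The user's account type is {user_type}; adjust the level of detail accordingly."
    return {"role": "system", "content": prompt}
```

Keeping prompts in one place also makes them easy to version and review, which helps when you compare tone variants later.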

2. Ground answers in internal or industry content

ChatGPT does not train on your documents by default. If you want it to answer product-specific or policy-related questions, you must provide context. One method uses embeddings: break documents into chunks, convert them into vectors, and store them in a database. At runtime, retrieve relevant chunks and include them with the prompt.

This process—called Retrieval Augmented Generation (RAG)—keeps the model current without retraining it. It works well for help desks, onboarding tools, and sales assistants.
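The RAG flow above (chunk, embed, store, retrieve, then prompt) can be sketched end to end in memory. To keep the example self-contained, `embed` is a toy stand-in that uses normalized word counts; a real system would call an embeddings model and store dense vectors in a vector database. All class and function names are hypothetical.

```python
import math
import re
from collections import Counter

Vector = dict[str, float]


def chunk_text(text: str, max_words: int = 80) -> list[str]:
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Vector:
    """Toy stand-in for an embeddings model: unit-length word-count vector."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def cosine(a: Vector, b: Vector) -> float:
    # Both vectors are unit-length, so the dot product equals cosine similarity.
    return sum(value * b.get(word, 0.0) for word, value in a.items())


class InMemoryIndex:
    """Minimal vector store: keeps (chunk, vector) pairs in a list."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Vector]] = []

    def add_document(self, text: str) -> None:
        for chunk in chunk_text(text):
            self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_rag_messages(index: InMemoryIndex, question: str) -> list[dict[str, str]]:
    """Put retrieved chunks in the system message and ask the model to stick to them."""
    context = "\n---\n".join(index.search(question))
    system = ("Answer using only the context below. If the answer is not there, say so.\n\n"
              f"Context:\n{context}")
    return [{"role": "system", "content": system}, {"role": "user", "content": question}]
```

Swapping in real embeddings only changes `embed` and the storage behind `InMemoryIndex`; the retrieval and prompt-building steps stay the same.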

3. Personalize the responses

If your app tracks user activity, preferences, or history, you can pass that context to ChatGPT to deliver more relevant replies. For example, a fitness app can use past workout data to adjust coaching advice. A finance app can reference recent transactions.

Personalization improves relevance and makes conversations feel less scripted. Just make sure to manage data securely and anonymize where possible.
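A sketch of passing profile context while keeping identifiers out of the prompt: drop sensitive fields and replace the user ID with a keyed hash. The field names and helpers are hypothetical, and a keyed hash is pseudonymization rather than full anonymization, since anyone holding the key can re-link the ID.

```python
import hashlib
import hmac
from typing import Any

# Fields that should never be sent to the model (hypothetical field names).
SENSITIVE_FIELDS = {"email", "phone", "full_name", "address", "card_number"}


def pseudonymize(user_id: str, key: str) -> str:
    """Replace the real user ID with a short keyed SHA-256 (HMAC) digest."""
    return hmac.new(key.encode("utf-8"), user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:12]


def user_context_message(profile: dict[str, Any], key: str) -> dict[str, str]:
    """Build a system message with non-sensitive profile facts for personalization."""
    safe = {k: v for k, v in profile.items()
            if k not in SENSITIVE_FIELDS and k != "user_id"}
    lines = [f"- {name}: {value}" for name, value in sorted(safe.items())]
    header = f"User {pseudonymize(profile['user_id'], key)} context:"
    return {"role": "system", "content": "\n".join([header, *lines])}
```

For the fitness example, a profile such as `{"user_id": ..., "last_workout": ..., "goal": ...}` yields a short context block the model can use without ever seeing the user's name or email.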

4. Align tone and language with your brand

ChatGPT can sound casual, formal, technical, or playful—depending on how you frame the prompt. For B2B apps, you might want short, professional answers. For a lifestyle product, you might prefer something warmer or more conversational.

Use controlled testing to fine-tune tone. Share sample outputs with your content or support teams to validate language before launch.

5. Iterate based on real feedback

Once users start interacting with ChatGPT, collect data. Identify repeated questions, confusing replies, and points where the assistant fails. Use this input to adjust prompts, add fallback logic, or change how you fetch context.

Successful teams treat this like product development. They track changes, test alternatives, and keep improving the assistant over time.
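A small example of turning raw feedback into the signals mentioned above: group near-duplicate questions and count which ones users mark as unhelpful. The `FeedbackEvent` shape is a hypothetical log format; adapt it to whatever your app records after each reply.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class FeedbackEvent:
    # Hypothetical event shape logged by your app after each reply.
    question: str
    helpful: bool


def normalize(question: str) -> str:
    """Lowercase and strip punctuation/extra spaces so near-duplicates group together."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in question.lower())
    return " ".join(cleaned.split())


def feedback_report(events: list[FeedbackEvent], top_n: int = 3) -> dict[str, list[tuple[str, int]]]:
    """Return the most repeated questions and those most often marked unhelpful."""
    asked = Counter(normalize(e.question) for e in events)
    unhelpful = Counter(normalize(e.question) for e in events if not e.helpful)
    return {"most_asked": asked.most_common(top_n),
            "most_unhelpful": unhelpful.most_common(top_n)}
```

Questions that rank high in both lists are usually the best targets for a prompt change or a new retrieval source.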

Conclusion

ChatGPT integration no longer requires a deep AI background or complex infrastructure. Teams that understand how to integrate ChatGPT into an app can quickly build tools that support users, automate tasks, and personalize experiences at scale. As more apps adopt conversational AI, the gap grows between products that feel static and those that respond in real time. Businesses that learn how to integrate AI into an app today gain an edge in usability, automation, and customer loyalty. Integration is just the starting point—the long-term value comes from how well you customize, scale, and secure it.

FAQ

1. Does ChatGPT work with existing CRMs or platforms?

Yes, ChatGPT can integrate with most CRMs and platforms through APIs. Developers use middleware or custom connectors to route data between systems like Salesforce, HubSpot, or Zendesk and ChatGPT. This setup allows the assistant to pull user records, update tickets, or generate replies based on stored data. Teams that know how to integrate AI into an app often extend that logic into internal tools. Just make sure to apply access controls and validate data before using it in prompts.

2. How secure is the ChatGPT API?

The ChatGPT API uses HTTPS encryption and requires secret keys for all requests. By default, OpenAI does not use data sent through the API to train its models, although it may retain API data for a limited period (typically up to 30 days) for abuse monitoring. To protect user data, you should store API keys in environment variables, sanitize inputs, and follow standard authentication practices. For enterprise use, you can add layers like role-based access or token expiration. Security depends on both OpenAI’s backend and your app’s architecture.
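For the "sanitize inputs" step, a minimal sketch might normalize Unicode, strip control characters, reject empty messages, and cap length so a single request cannot inflate token costs. The length limit and the `sanitize_user_input` name are assumptions. This kind of cleanup does not by itself prevent prompt injection; keep access controls on any data or tools the model can reach.

```python
import unicodedata

MAX_INPUT_CHARS = 4000  # hypothetical limit; tune it to your token budget


def sanitize_user_input(text: str, max_chars: int = MAX_INPUT_CHARS) -> str:
    """Normalize Unicode, strip control characters, and cap length before prompting."""
    text = unicodedata.normalize("NFKC", text)
    # Drop control characters (Unicode category "Cc") except newlines and tabs.
    text = "".join(ch for ch in text if ch in "\n\t" or unicodedata.category(ch) != "Cc")
    text = text.strip()
    if not text:
        raise ValueError("empty message")
    return text[:max_chars]
```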

3. Can I embed ChatGPT into a website?

Yes, you can embed ChatGPT into any website by building a frontend chat interface and connecting it to your backend API route. The backend sends user input to OpenAI and returns the response for display. Many teams choose to use JavaScript or frameworks like React for this interface. You can also use no-code tools for simple sites or rapid prototypes. ChatGPT integration with websites helps improve onboarding, automate support, or guide users in real time.